Over the past few years, Google has consistently updated its search algorithms to put users’ needs first. In early September this year, it officially announced the launch of the new Google Search Console. Less than 50 days later, another search algorithm update arrived: Google’s BERT update.

If you are wondering whether this revision is just like a Core update, Penguin, or Hummingbird, the answer is no. The BERT update is considered one of the most significant search algorithm updates since RankBrain in 2015.

In this article, we have focused on providing you information about:

- What is BERT update?

- What are its features?

- When is it used?

- How does it affect your search?

- Should you optimize accordingly?

- What are the pros and cons?

All you need to know about the update:

You are probably wondering what BERT is. It stands for Bidirectional Encoder Representations from Transformers. What that means is what we are about to explain.

Last year, Google open-sourced a neural network-based technique for Natural Language Processing (NLP) pre-training. This technique is called BERT. It enables anyone to train their own state-of-the-art question answering system.

Why does this matter for search? You and I do not always search using grammatically well-formed sentences. Many people search using a string of words related to their query.

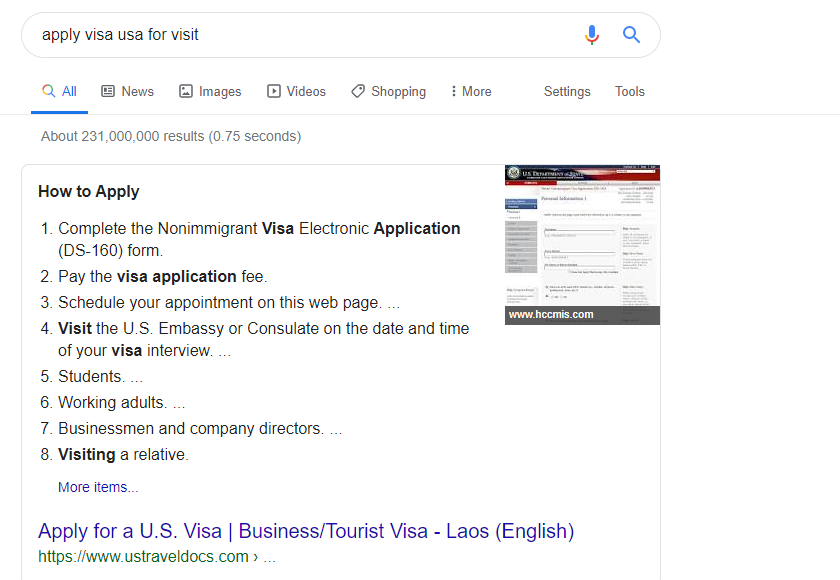

Source: Google Search

But Google already has an autocomplete feature to figure out what your query is about, so what is this update for? Let’s take a look.

Despite many earlier updates, search results can still miss the mark for complex queries. The new update is about understanding queries better and providing the user with more relevant information.

This revision is the result of Google’s research on transformers: models that process each word in relation to all the other words in a sentence or query, rather than one by one in sequence.

Now, let us glance at the features of the revised algorithm.

What are the Features of BERT?

The revisions in the search algorithm are not confined to software alone. There are hardware advancements too. For the first time, Cloud TPUs are being used to serve search results.

Cloud TPUs power Google products like Translate, Photos, Search, Assistant, and Gmail. These TPUs, or Tensor Processing Units, are now used alongside the search algorithms to serve search results and provide users with the most relevant information.

For now, this update is limited to US English and will be rolled out to other languages over time.

Let us understand its usage in detail.

When is BERT used?

BERT comes into play when Google needs to understand a search query better in order to return relevant results. It also applies to featured snippets.

However, this doesn’t mean Google’s BERT update has replaced RankBrain. BERT is an additional Natural Language Processing (NLP) method that helps Google understand your query clearly and provide you with relevant results.

Let us now understand how this update affects our search.

How does BERT affect search?

According to Google, the BERT update will affect 1 in 10 English-language search queries in the US.

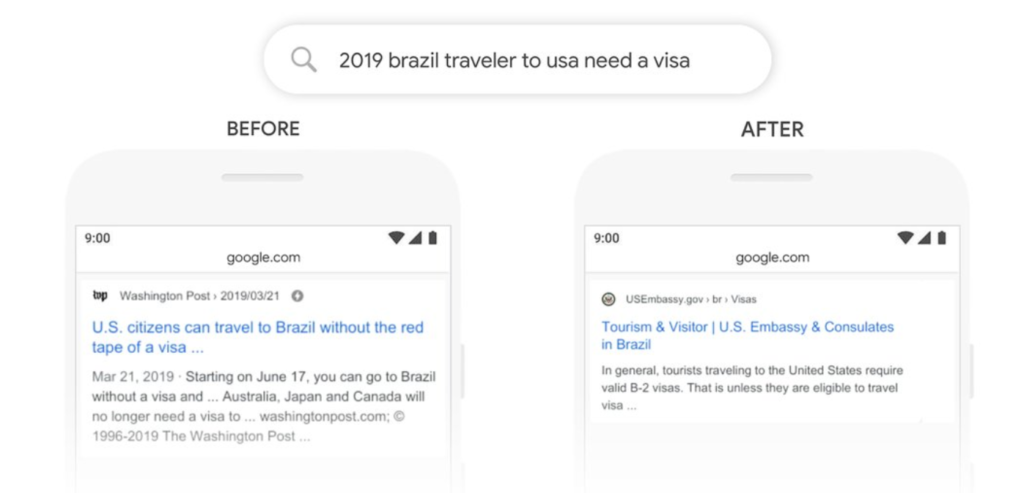

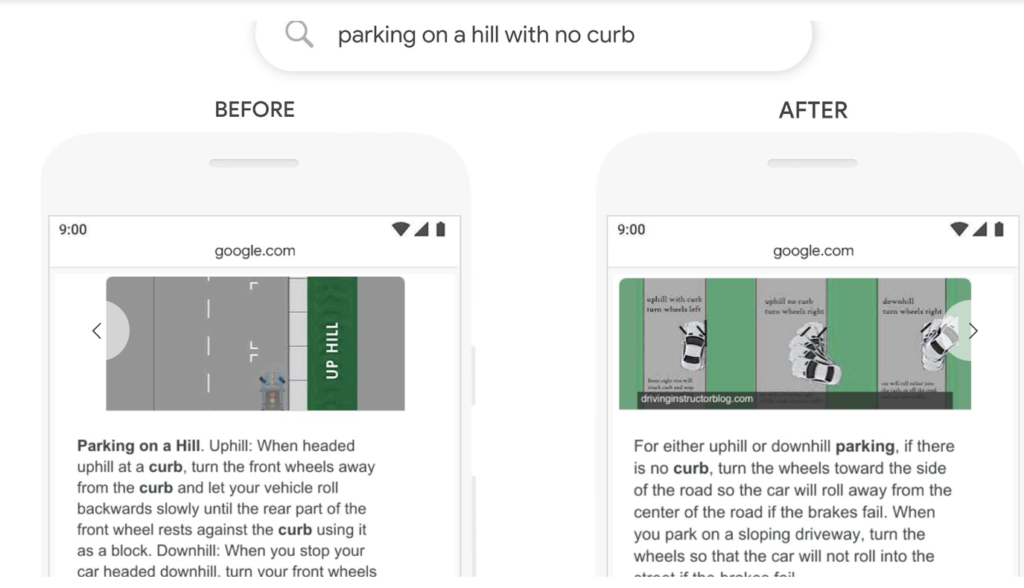

For example, suppose you search for “2019 brazil traveler to usa need a visa”. Your results before and after the BERT update are as follows.

Source: Google Blog

Earlier, the search results focused on how US citizens can travel to Brazil. After the update, the results address how Brazilians can get a US visa.

The search algorithm now takes prepositions like “for” and “to” into account when interpreting a search query.
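To see why small words like “to” matter, here is a minimal sketch of plain keyword matching. It is an illustration only, not how Google Search works: the stop-word list and the `keyword_bag` helper are hypothetical names made up for this example. It shows that once prepositions and word order are thrown away, two opposite questions look identical.

```python
from collections import Counter

# Hypothetical stop-word list, chosen only for this illustration.
STOP_WORDS = {"to", "for", "a", "the", "from"}


def keyword_bag(query: str) -> Counter:
    """Reduce a query to an unordered bag of keywords, dropping stop words."""
    return Counter(word for word in query.lower().split() if word not in STOP_WORDS)


brazil_to_usa = "brazil traveler to usa need a visa"
usa_to_brazil = "usa traveler to brazil need a visa"

# The two queries ask opposite questions, yet their keyword bags are identical.
print(keyword_bag(brazil_to_usa) == keyword_bag(usa_to_brazil))  # True
```

A system that only compares keyword bags cannot tell who is travelling where, which is the kind of gap a context-aware model is meant to close.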

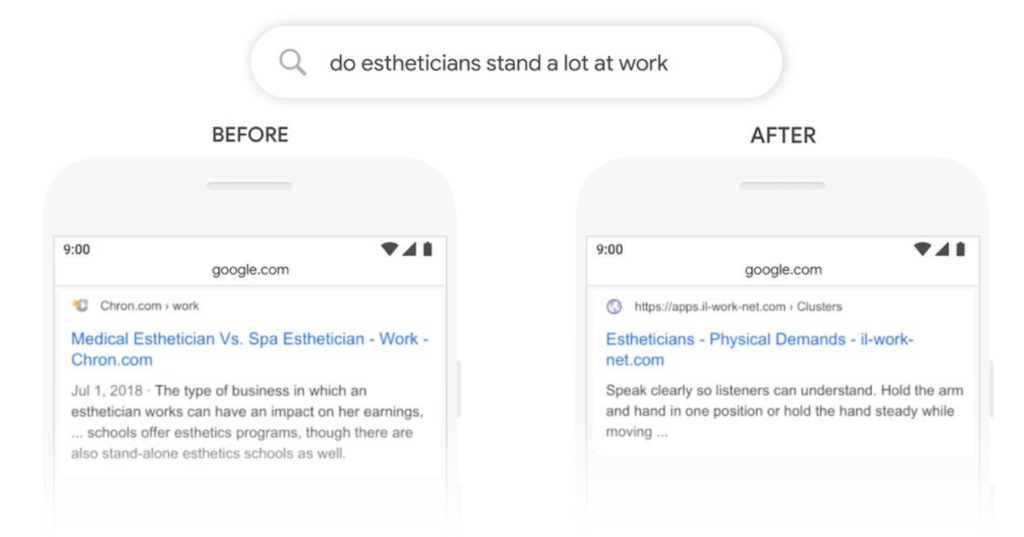

As another example, suppose you wanted to know “do estheticians stand a lot at work”. Your results before and after the BERT update would be:

Source: Google Blog

Here, Google previously matched “stand” in the query with “stand-alone” in a result. It now understands that “stand” refers to the physical demands of the job.

Does Google’s BERT Update Only Affect Search Queries?

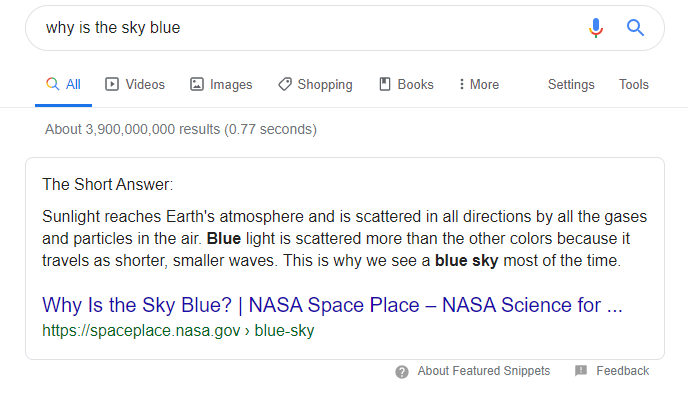

No, the Google BERT update is not limited to search queries. It is also live globally for featured snippets.

A featured snippet is a brief answer to your query that appears at the top of the Google search results. It is usually extracted from the content of a web page and includes the title and URL of the page.

With the Google BERT update, featured snippets change too, and the results are as follows:

Source: Google Blog

You can see the difference for yourself. Isn’t it huge?

So, the update can now understand how each preposition is used and provide results accordingly.

The big question for digital marketers is whether they should optimize accordingly. Before answering it, let us look at how BERT works.

What is the Architecture of BERT?

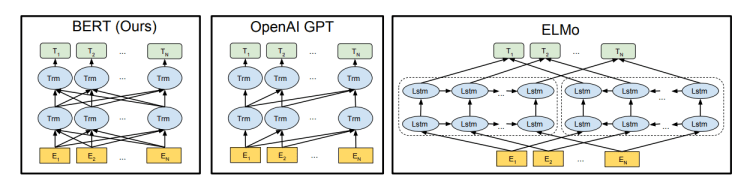

BERT is built on the Transformer, an attention mechanism that learns contextual relations among words (or sub-words) in a text. A full Transformer has two separate parts: an encoder that reads the text input and a decoder that produces a prediction for the task. Since BERT’s goal is to generate a language model, only the encoder is necessary.
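The attention idea can be sketched in a few lines of Python. This is a deliberately simplified illustration, not BERT’s actual implementation: a real Transformer uses learned query, key, and value projections and several attention heads, while this sketch uses the raw vectors directly. The `toy_embeddings` values and the function names are assumptions made up for the example.

```python
import math


def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    highest = max(scores)
    exps = [math.exp(s - highest) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(vectors):
    """Scaled dot-product self-attention without learned projections."""
    dim = len(vectors[0])
    outputs, all_weights = [], []
    for query in vectors:
        # Score this word against every word in the sentence, itself included.
        scores = [
            sum(q * k for q, k in zip(query, key)) / math.sqrt(dim)
            for key in vectors
        ]
        weights = softmax(scores)
        all_weights.append(weights)
        # The new representation is a weighted mix of all word vectors.
        outputs.append(
            [sum(w * vec[i] for w, vec in zip(weights, vectors)) for i in range(dim)]
        )
    return outputs, all_weights


# Made-up 2-dimensional "embeddings" for a three-word sentence.
toy_embeddings = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
outputs, weights = self_attention(toy_embeddings)
for row in weights:
    print([round(w, 3) for w in row])
```

Each printed row shows how much one word attends to every word in the sentence. Every word gets a representation that depends on all the others at once, rather than only on the words that came before it.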

Instead of predicting the next word in a sequence, BERT uses a technique called Masked LM (MLM): it randomly masks words in the sentence and then tries to predict them. Because a masked word can sit anywhere in the sentence, the model has to look in both directions, using the full context to its left and right, to predict it. Unlike earlier language models, it takes the previous and next tokens into account at the same time. Existing models that combined left-to-right and right-to-left LSTMs were missing this “same-time” part.

The image above compares BERT’s neural network architecture with previous state-of-the-art contextual pre-training methods. The arrows indicate the flow of information from one layer to the next. The green boxes at the top indicate the final contextualized representation of each input word.

The three pre-training methods differ from one another: BERT is deeply bidirectional, OpenAI GPT is unidirectional, and ELMo is shallowly bidirectional.
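The masking step can be sketched as follows. This is a simplified illustration under stated assumptions: the `mask_tokens` helper, the whitespace tokenization, and the seed are made up for the example, and the default 15% masking rate follows the published BERT paper. The real pre-training procedure is more elaborate than a plain swap to `[MASK]`.

```python
import random

MASK_TOKEN = "[MASK]"


def mask_tokens(tokens, mask_prob=0.15, seed=0):
    """Randomly hide tokens and remember the originals as prediction targets."""
    rng = random.Random(seed)  # Seeded so the example is repeatable.
    masked, labels = [], {}
    for position, token in enumerate(tokens):
        if rng.random() < mask_prob:
            masked.append(MASK_TOKEN)
            labels[position] = token  # What the model would have to predict.
        else:
            masked.append(token)
    return masked, labels


tokens = "do estheticians stand a lot at work".split()
# A higher rate than the default, only so that a short sentence gets masked.
masked, labels = mask_tokens(tokens, mask_prob=0.3, seed=1)
print(masked)
print(labels)
```

To fill in a hidden position, the model sees the words on both sides of the gap, which is what forces it to use context from the left and the right together.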
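The difference between a unidirectional and a deeply bidirectional model comes down to which words each position is allowed to look at. Here is a rough sketch of that idea; `visible_positions` and its mode names are hypothetical, and ELMo’s approach (two separately trained one-directional models whose outputs are combined) is not modelled here.

```python
def visible_positions(length, mode):
    """Return, for each position, the list of positions it may attend to."""
    if mode == "bidirectional":
        # Every word sees the whole sentence, left and right.
        return [list(range(length)) for _ in range(length)]
    if mode == "left_to_right":
        # Every word sees only itself and the words before it.
        return [list(range(i + 1)) for i in range(length)]
    raise ValueError(f"unknown mode: {mode}")


tokens = ["brazil", "traveler", "to", "usa"]
for mode in ("left_to_right", "bidirectional"):
    print(mode)
    for token, seen in zip(tokens, visible_positions(len(tokens), mode)):
        print(f"  {token!r} sees {[tokens[j] for j in seen]}")
```

In the left-to-right case, “to” never sees “usa”, so the model cannot know the destination when it builds a representation for the preposition. In the bidirectional case it can.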

Should you optimize your SEO accordingly?

Google says:

“It’s our job to figure out what you are searching for and surface helpful information from the web, irrespective of how you spell or combine the words in your query.”

So, this is an effort by Google to better understand the searcher’s query and match it with more relevant results. This algorithm update is not intended to penalize any site.

Hence, instead of focusing on optimization, focus on writing content for users, as you usually do. We also suggest you keep an eye on your search traffic and optimize according to search trends.

What are the Pros and Cons of Google BERT Update?

Like every coin, every update has two sides. This revision improves search results by understanding the user’s natural language. But it is going to affect one in ten searches in the US, which means 10% of those searches will see different results. That is huge.

This suggests that Google will focus less on keyword density and more on understanding the content of the text. However, language understanding remains an ongoing challenge for the search giant.

What do we suggest?

Though the methods of search have been refined, there is still an opportunity for you to rank on top. Here are a few tips to improve your SEO with BERT in mind:

Use Long-Tail Keywords:

As mentioned earlier, this update is Google’s step towards understanding searchers’ queries. The search results are based on the phrases the searcher uses. Hence, use long-tail keywords for your topics that match the phrases a searcher is likely to use.

If you don’t know how to generate long-tail keywords, use tools like Semrush, Ubersuggest, or Keyword Tool.

These tools provide you with related keywords, long-tail keywords, and their density. This will help you decide which keywords to focus on in your SEO.

Do not focus on article length:

Writing lengthy articles is a long-standing tradition in digital marketing and content marketing. But with the Google BERT update, article length is not as relevant as it was. This doesn’t mean long articles will be ignored. Articles that are most relevant to users’ concerns will rank at the top of the search results.

So, instead of focusing on the length of the article, focus more on human understanding, readability, and content relation.

To check your readability, you can use tools like Hemingway or Grammarly.

Conclusion:

Some sites are likely to lose traffic because of this update. Do not worry if your traffic or conversion rates have dropped. Take this as an opportunity to develop content that is highly specific to your user persona.

Try to answer more of your users’ concerns and questions in your articles. If you do not know which questions to answer, use sources like AnswerThePublic, Google autocomplete suggestions, FAQs, and Quora.

We hope this article helped you understand the BERT update.

We would love to hear your views on this Google update. So, do write to us in the comment section below or contact us.

